A little over two years ago, a tiny piece of code containing a bug was introduced to the internet. This bug is known as Heartbleed, and in the two years it took for the world to recognize its existence, it has caused quite a few headaches. In addition to allowing cybercriminals to steal passwords and usernames from Yahoo, it has also allowed people to steal from online bank accounts, infiltrate government institutions (such as the Canada Revenue Agency), and generally undermine confidence in the internet.

What’s more, in an age of cyberwarfare and domestic surveillance, its appearance has given conspiracy theorists a field day. Since it was first disclosed a month to the day ago, some rather interesting theories have surfaced as to how the NSA and China have been exploiting it to spy on people. But more on that later. First, some explanation of what Heartbleed is, where it came from, and how people can protect themselves from it seems in order.

To begin with, Heartbleed is not a virus or a type of malware in the traditional sense, though it can be exploited by malware and cybercriminals to achieve similar results. Basically, it is a security bug, or programming error, in popular versions of OpenSSL, a software library that encrypts and protects the privacy of your password, banking information and any other sensitive data you provide in the course of checking your email or doing a little online banking.

Though it was only made public a month ago, the origins of the bug go back just over two years – to New Year’s Eve 2011, to be exact. It was at this time that Stephen Henson, one of the collaborators on the OpenSSL Project, received the code from Robin Seggelmann – a respected academic who’s an expert in internet protocols. Henson reviewed the code – an update for the OpenSSL internet security protocol — and by the time he and his colleagues were ringing in the New Year, he had added it to a software repository used by sites across the web.

What’s interesting about the bug, which is named for the “heartbeat” part of the code that it affects, is that it is not a virus or piece of malware in the traditional sense. What it does is allow attackers to read the memory of systems that are protected by the bug-affected code, which is used by roughly two-thirds of the internet. That way, cybercriminals can obtain the keys they need to decode and read the encrypted data they want.
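To make the memory-reading idea concrete, here is a minimal Python sketch of the general mechanism. It is a toy simulation, not OpenSSL's actual code: the function names, the flat `memory` layout and the sample secret are all assumptions made for illustration. The point it tries to show is that a heartbeat reply built from the length the sender claims, rather than the length of what was actually sent, can spill neighbouring memory.

```python
def build_memory(payload: bytes, secret: bytes) -> bytes:
    """Toy memory layout (assumed): the request payload sits right before unrelated secret data."""
    return payload + secret


def heartbeat_vulnerable(memory: bytes, claimed_len: int) -> bytes:
    """Echo back claimed_len bytes, trusting the sender's length field blindly."""
    return memory[:claimed_len]


def heartbeat_fixed(memory: bytes, actual_len: int, claimed_len: int) -> bytes:
    """Silently drop any request whose claimed length exceeds the real payload."""
    if claimed_len > actual_len:
        return b""
    return memory[:claimed_len]


payload = b"bird"                    # what the client really sent (4 bytes)
secret = b"-----PRIVATE KEY-----"    # hypothetical sensitive data nearby
memory = build_memory(payload, secret)

# The client claims it sent 16 bytes, although the payload is only 4 bytes long.
leak = heartbeat_vulnerable(memory, claimed_len=16)
safe = heartbeat_fixed(memory, actual_len=len(payload), claimed_len=16)

print(leak)  # the payload followed by 12 bytes that were never sent
print(safe)  # empty reply: the oversized request was discarded
```

In this sketch the fix amounts to a single bounds check: compare the claimed length against the real one before replying.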

The bug was independently discovered recently by Codenomicon, a Finnish web security firm, and Google Security researcher Neel Mehta. The official name for the vulnerability is CVE-2014-0160. Since information about its discovery was disclosed on April 7th, 2014, it has been estimated that some 17 percent (around half a million) of the Internet’s secure web servers certified by trusted authorities were vulnerable.

Several institutions have also come forward in that time to declare that they were subject to attack. For instance, the Canada Revenue Agency reported that its systems were accessed through an exploit of the bug during a 6-hour period on April 8th, resulting in the theft of Social Insurance Numbers belonging to 900 taxpayers. When the attack was discovered, the agency shut down its web site and extended the taxpayer filing deadline from April 30 to May 5.

The agency also said it would provide anyone affected with credit protection services at no cost, and it appears that the guilty parties were apprehended. This was announced on April 16, when the RCMP claimed that they had charged an engineering student in relation to the theft with “unauthorized use of a computer” and “mischief in relation to data”. In another incident, the UK parenting site Mumsnet had several user accounts hijacked, and its CEO was impersonated.

Another consequence of the bug is the impetus it has given to conspiracy theorists who believe it may be part of a government-sanctioned ploy. Given recent revelations about the NSA’s extensive efforts to eavesdrop on internet activity and engage in cyberwarfare, this is hardly a surprise. Nor would it be the first time, as anyone will know who recalls the case made that the NIST SP 800-90 Dual EC DRBG, a pseudorandom number generator used in cryptography, acted as a “backdoor” for the NSA to exploit.

In both that case and this latest bout of speculation, the belief is that a vulnerability in the encryption itself may have been intentionally created to allow spy agencies to steal the private keys that vulnerable web sites use to encrypt your traffic to them. And cracking SSL to decrypt internet traffic has long been on the NSA’s wish list. Last September, the Guardian reported that the NSA and Britain’s GCHQ had “successfully cracked” much of the online encryption we rely on to secure email and other sensitive transactions and data.

According to documents the paper obtained from Snowden, GCHQ had specifically been working to develop ways into the encrypted traffic of Google, Yahoo, Facebook, and Hotmail to decrypt traffic in near-real time; and in 2010, there was documentation that suggested that they might have succeeded. Although this was two years before the Heartbleed vulnerability existed, it does serve to highlight the agency’s efforts to get at encrypted traffic.

For some time now, security experts have speculated about whether the NSA cracked SSL communications; and if so, how the agency might have accomplished the feat. But now, the existence of Heartbleed raises the possibility that in some cases, the NSA might not have needed to crack SSL at all. Instead, it’s possible the agency simply used the vulnerability to obtain the private keys of web-based companies to decrypt their traffic.

Though security vulnerabilities come and go, this one is deemed catastrophic because it’s at the core of SSL, the encryption protocol trusted by so many to protect their data. And beyond abuse by government sources, the bug is also worrisome because it could possibly be used by hackers to steal usernames and passwords for sensitive services like banking, ecommerce, and email. In short, it empowers individual troublemakers everywhere by ensuring that the locks on our information can be exploited by anyone who knows how to do it.

Matt Blaze, a cryptographer and computer security professor at the University of Pennsylvania, claims that “It really is the worst and most widespread vulnerability in SSL that has come out.” The Electronic Frontier Foundation, Ars Technica, and Bruce Schneier all deemed the Heartbleed bug “catastrophic”, and Forbes cybersecurity columnist Joseph Steinberg even went as far as to say that:

Some might argue that [Heartbleed] is the worst vulnerability found (at least in terms of its potential impact) since commercial traffic began to flow on the Internet.

Regardless, Heartbleed does point to a much larger problem with the design of the internet. Some of its most important pieces are controlled by just a handful of people, many of whom aren’t paid well — or aren’t paid at all. In short, Heartbleed has shown that more oversight is needed to protect the internet’s underlying infrastructure. And the sad truth is that open source software — which underpins vast swathes of the net — has a serious sustainability problem.

Another problem is money, in that important projects just aren’t getting enough of it. Whereas well-known projects such as Linux, Mozilla, and the Apache web server enjoy hundreds of millions of dollars in annual funding, the OpenSSL Software Foundation, which is forced to raise money for the project’s software development, has never raised more than $1 million in a year. To top it all off, there are issues when it comes to the open source ecosystem itself.

Typically, projects start when developers need to fix a particular problem; and when they open source their solution, it’s instantly available to everyone. If the problem they address is common, the software can become wildly popular overnight. As a result, some projects never get the full attention from developers they deserve. Steve Marquess, one of the OpenSSL foundation’s partners, believes that part of the problem is that whereas people can see and touch their web browsers and Linux, they are out of touch with the cryptographic library.

In the end, the only real solution lies in informing the public. Since internet security affects us all, and the processes by which we secure our information are entrusted to too few hands, the immediate solution is to widen the scope of inquiry and involvement. It also wouldn’t hurt to commit additional resources to the process of monitoring and securing the web, thereby ensuring that spy agencies and private individuals are not exercising too much control over it, or able to do clandestine things with it.

In the meantime, the researchers from Codenomicon have set up a website, heartbleed.com, with more detailed information on the bug and on what you can do to protect yourself.
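For anyone running their own server, one first check is whether the installed OpenSSL release falls in the affected range. The Python sketch below assumes the publicly documented range for CVE-2014-0160, namely releases 1.0.1 through 1.0.1f, with 1.0.1g carrying the fix. The helper name `is_heartbleed_affected` is hypothetical, and the check looks at plain release strings only: it does not handle beta builds, builds compiled without heartbeat support, or distribution packages that were patched without a version change.

```python
import re

# Matches plain OpenSSL release strings such as "1.0.1f" or "0.9.8y".
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)([a-z]?)$")


def is_heartbleed_affected(version: str) -> bool:
    """Return True if a plain release string lies in the assumed affected range 1.0.1 to 1.0.1f."""
    match = _VERSION_RE.match(version.strip())
    if match is None:
        raise ValueError(f"unrecognised OpenSSL version: {version!r}")
    major, minor, fix, letter = match.groups()
    if (int(major), int(minor), int(fix)) != (1, 0, 1):
        return False  # other release branches are treated as unaffected
    # An empty letter means the original 1.0.1 release; letters up to "f" predate the fix.
    return letter < "g"


for candidate in ("1.0.1", "1.0.1f", "1.0.1g", "1.0.0l", "0.9.8y"):
    print(candidate, is_heartbleed_affected(candidate))
```

A version check like this is only a starting point. A server that was exposed may still need new keys and certificates even after upgrading.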

Sources: cbc.ca, wired.com, (2), heartbleed.com

It’s no secret that amongst its many kooky and futuristic projects, self-driving cars are something Google hopes to make real within the next few years. Late last month, Google’s fleet of autonomous automobiles reached an important milestone. After many years of testing on the roads of California and Nevada, they have logged well over one million kilometers (700,000 miles) of accident-free driving. To celebrate, Google has released a new video that demonstrates some impressive software improvements made over the last two years.

In the video, we see Google’s car reacting to railroad crossings, large stationary objects, roadwork signs and cones, and cyclists. In the case of cyclists, not only is the car able to discern whether a cyclist wants to move left or right, it even watches out for cyclists coming from behind when making a right turn. And while the demo certainly makes the whole process seem easy and fluid, there is actually a considerable amount of work going on behind the scenes.

While a lot has been said about the expensive LIDAR hardware, the most impressive aspect of the innovations is the computer vision. While LIDAR provides a very good idea of the lay of the land and the position of large objects (like parked cars), it doesn’t help with spotting speed limits or “construction ahead” signs, or with telling whether what’s ahead is a cyclist or a railroad crossing barrier. And Google has certainly demonstrated plenty of adeptness in this area in the past, what with their latest versions of Street View and their Google Glass project.

Beyond Japan, solar power is considered the front runner of alternative energy, at least until fusion power comes of age. But until such time as a fusion reaction can be triggered that produces substantially more energy than is required to initiate it, solar will remain the only

Luckily, putting arrays into orbit solves both of these issues. Above the

Presumably SpaceX will provide another update in due course. In the meantime, they took the opportunity to release a rather awesome video of

As Musk himself explained in a series of public statements and interviews after the launch:

And that’s not the only discovery of cosmic significance made in recent months. In this case, the news comes from NASA’s Fermi Gamma-ray Space Telescope, which has been analyzing high-energy gamma rays emanating from the galaxy’s center since 2008. After poring over the results, an independent group of scientists claimed that they had found an unexplained source of emissions that they say is “consistent with some forms of dark matter.”

The galactic center teems with gamma-ray sources, from interacting binary systems and isolated pulsars to supernova remnants and particles colliding with interstellar gas. It’s also where astronomers expect to find the galaxy’s highest density of dark matter, which only affects normal matter and radiation through its gravity. Large amounts of dark matter attract normal matter, forming a foundation upon which visible structures, like galaxies, are built.

As a result, scientists are looking to come up with creative solutions to the problem. One such concept is the Green Machine – a

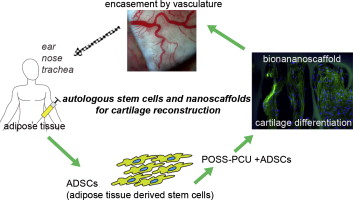

There is also the potential to begin reconstructive treatment with stem cells derived from adipose tissue earlier than previously possible, as it takes time for the ribs to grow enough cartilage to undergo the procedure. As Dr. Patrizia Ferretti, a researcher working on the project, said in a recent interview:
